Unlocking Secure Data Collaboration: A Technical Introduction to OpenMined

1. How Privacy-Preserving Data Collaboration Solves AI’s Data Bottleneck

1.1 The 0.01% Data Utilization Problem

Modern AI systems rely on less than 0.01% of the world’s available data, largely because organizations lack the security controls needed to share or access sensitive information safely. OpenMined, a global nonprofit building secure infrastructure for non-public information, is pioneering new ways to enable research and AI development without exposing or relocating sensitive datasets.

1.2 Why Privacy-Preserving Data Collaboration Outperforms Centralized Models

Traditional approaches attempt to centralize data in a single repository, but this creates multiple challenges:

• High breach risk

• Single points of failure

• Loss of control for data owners

Centralized data lakes are poorly suited to regulated or high-sensitivity information at global scale. Depending on the implementation and use case, centralization can also create scalability challenges.

1.3 Regulatory and Privacy Barriers

Laws like GDPR, HIPAA, CCPA, FERPA, and sector-specific security frameworks restrict how sensitive data can move, be processed, or be shared. Even when legal sharing is possible, public trust issues and organizational risk aversion limit collaboration.

1.4 The Need for Secure, Distributed Access

To unlock the majority of global data, AI must evolve from a “collect and compute” model to a “compute where data lives” architecture. This is the foundation of OpenMined’s mission.

2. OpenMined’s Mission and System Architecture

2.1 “Public Network for Non-Public Information”

OpenMined aims to build a global infrastructure layer that allows researchers and institutions to query sensitive data without ever taking possession of it. Data remains local; computation travels to the source.

Traditional open- and closed-source AI systems (left) copy data to AI providers, giving those providers unilateral control over the resulting model and its predictions. ABC-enabled AI systems (right) instead enable direct communication between those who hold data and those seeking insights. In ABC-enabled AI (Attribution-Based Control), attribution and control flow with the information, allowing data sources to retain control over which predictions they support.
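The "data remains local; computation travels to the source" pattern can be illustrated with a minimal Python sketch. `DataSite` and `mean_age` are hypothetical names, not part of PySyft or SyftBox, and a plain Python object does not actually enforce isolation; the sketch only shows the direction of flow, where the function moves to the data and only an aggregate comes back.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class DataSite:
    """Hypothetical data owner node; its records are never returned directly."""
    name: str
    _records: list[dict] = field(default_factory=list, repr=False)

    def run(self, computation: Callable[[list[dict]], Any]) -> Any:
        # The computation is shipped to the site and executed against local
        # records; only its return value goes back to the requester.
        return computation(self._records)


def mean_age(records: list[dict]) -> float:
    # Aggregate query: returns a single number, never individual rows.
    return sum(r["age"] for r in records) / len(records)


hospital = DataSite("hospital_a", [{"age": 34}, {"age": 51}, {"age": 47}])
result = hospital.run(mean_age)
print(result)  # 44.0
```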

2.2 Principles of Data Autonomy and Local Control

OpenMined enforces:

• Data stays under the governance of its owner

• Non-public information is never centralized

• All computation is permissioned

• Auditable action trails ensure accountability
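A minimal sketch of how permissioned computation and an action trail can fit together, assuming a simple submit/approve model. `PermissionedSite`, `submit`, `reject`, and `approve_and_run` are hypothetical names chosen for illustration, not OpenMined APIs.

```python
import datetime
from typing import Any, Callable


class PermissionedSite:
    """Hypothetical site: nothing runs until the data owner approves it."""

    def __init__(self, records: list[int]) -> None:
        self._records = records
        self._pending: dict[int, tuple[str, Callable[[list[int]], Any]]] = {}
        self._next_id = 0
        # Each entry: (UTC timestamp, event, request id, actor)
        self.audit_log: list[tuple[str, str, int, str]] = []

    def _log(self, event: str, request_id: int, actor: str) -> None:
        stamp = datetime.datetime.now(datetime.timezone.utc).isoformat()
        self.audit_log.append((stamp, event, request_id, actor))

    def submit(self, requester: str, fn: Callable[[list[int]], Any]) -> int:
        # Requests are queued, not executed.
        request_id = self._next_id
        self._next_id += 1
        self._pending[request_id] = (requester, fn)
        self._log("submitted", request_id, requester)
        return request_id

    def reject(self, request_id: int, owner: str) -> None:
        self._pending.pop(request_id)
        self._log("rejected", request_id, owner)

    def approve_and_run(self, request_id: int, owner: str) -> Any:
        # Only an explicit owner approval moves a request from queue to execution.
        _requester, fn = self._pending.pop(request_id)
        self._log("approved", request_id, owner)
        result = fn(self._records)
        self._log("executed", request_id, owner)
        return result


site = PermissionedSite([3, 5, 8])
rid = site.submit("researcher_1", sum)
total = site.approve_and_run(rid, "data_owner")
print(total)  # 16
print([entry[1] for entry in site.audit_log])  # ['submitted', 'approved', 'executed']
```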

2.3 Governance Models for Privacy-Preserving Data Collaboration

A decentralized approach allows multiple institutions to enforce their own privacy rules, access control, and compliance frameworks. This contrasts with centralized data-sharing hubs, which impose uniform policies that often cannot accommodate all regulatory contexts.
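To make the contrast with a uniform central policy concrete, here is a minimal sketch in which each institution applies its own rules to the same incoming request. The policy fields (`allowed_queries`, `min_group_size`) and the `answer` function are hypothetical examples of local rules, not an OpenMined policy format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SitePolicy:
    """Hypothetical local policy; each institution chooses its own values."""
    allowed_queries: frozenset
    min_group_size: int  # refuse to answer over groups smaller than this


def answer(policy: SitePolicy, query: str, values: list[float]) -> Optional[float]:
    # Every site applies its *own* policy to the same incoming request.
    if query not in policy.allowed_queries or len(values) < policy.min_group_size:
        return None  # refused under local rules
    if query == "count":
        return float(len(values))
    if query == "mean":
        return sum(values) / len(values)
    return None  # unknown query type


strict_site = SitePolicy(frozenset({"count"}), min_group_size=10)
permissive_site = SitePolicy(frozenset({"count", "mean"}), min_group_size=5)
readings = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]

print(answer(strict_site, "mean", readings))      # None (query type not permitted)
print(answer(permissive_site, "mean", readings))  # 3.5
```

Because each site evaluates requests against its own policy, the same query can succeed at one institution and be refused at another, without a central authority deciding on their behalf.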

2.4 High-Level Architecture of OpenMined Tools

At a high level, OpenMined provides:

• A network of secure enclaves (SyftBox)

• A computation layer built on privacy-enhancing technologies, or PETs (via PySyft)

• A governance layer for permissions and audits

• A distributed registry for discovering datasets and models

This architecture is designed to enable federation at scale, specifically privacy-preserving data collaboration across institutions that cannot move or centralize their datasets.
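The distributed registry holds descriptions of datasets, not the datasets themselves. The sketch below models that idea as a single in-memory index for simplicity (a real registry would be distributed); `DatasetEntry`, `Registry`, and `row_count_hint` are hypothetical names, not an OpenMined schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetEntry:
    """Hypothetical registry record: describes a dataset, contains no rows."""
    name: str
    owner: str
    schema: tuple[str, ...]
    row_count_hint: str  # coarse range such as "10k-100k", not an exact count


class Registry:
    def __init__(self) -> None:
        self._entries: list[DatasetEntry] = []

    def publish(self, entry: DatasetEntry) -> None:
        self._entries.append(entry)

    def find_by_column(self, column: str) -> list[DatasetEntry]:
        # Researchers discover *where* relevant data lives; access still
        # goes through each owner's own approval process.
        return [e for e in self._entries if column in e.schema]


registry = Registry()
registry.publish(DatasetEntry("icu_vitals", "hospital_a", ("age", "heart_rate"), "10k-100k"))
registry.publish(DatasetEntry("tax_records", "agency_b", ("income", "region"), "1M+"))
print([e.name for e in registry.find_by_column("age")])  # ['icu_vitals']
```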

3. Privacy-Preserving Data Collaboration Through PETs

3.1 Differential Privacy

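Differential Privacy (DP) adds carefully calibrated noise to outputs so that a result reveals very little about whether any single individual’s record was included. A privacy parameter, usually written ε (epsilon), controls the trade-off: smaller values mean more noise and stronger protection.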

DP is a valuable approach for statistical reporting, monitoring, and pattern analysis.
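A common way to apply DP to a counting query is the Laplace mechanism, shown here as a minimal sketch. Adding or removing one person changes a count by at most 1 (its sensitivity), so Laplace noise with scale 1/ε yields ε-differential privacy. The function names and the example data are hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale`
    # is Laplace-distributed with location 0 and scale `scale`.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(records: list[bool], epsilon: float, rng: random.Random) -> float:
    """Release a count with the Laplace mechanism (sensitivity = 1)."""
    true_count = sum(records)
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)
has_condition = [True] * 120 + [False] * 880
print(dp_count(has_condition, epsilon=0.5, rng=rng))  # close to, but not exactly, 120
```

Production DP libraries also handle issues this sketch ignores, such as floating-point weaknesses in naive noise sampling and tracking the total privacy budget spent across many queries.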

3.2 Federated Learning

Federated Learning (FL) sends model updates, not raw data, to a central coordinator, so training data never leaves its origin. When FL is combined with secure aggregation, the coordinator sees only the aggregate of many updates, so individual contributions cannot be singled out.
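Federated averaging (FedAvg), a widely used FL algorithm, can be sketched in a few lines. This is a toy example assuming a one-variable linear model and two hypothetical sites; each site trains locally on its own (x, y) pairs, and the coordinator averages the returned parameters weighted by each site's dataset size.

```python
def local_update(w, b, data, lr=0.3, steps=50):
    """Gradient descent on mean squared error for y ≈ w*x + b, using only local data."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_average(updates, sizes):
    """Weight each site's parameters by how many examples it trained on."""
    total = sum(sizes)
    w = sum(uw * s for (uw, _), s in zip(updates, sizes)) / total
    b = sum(ub * s for (_, ub), s in zip(updates, sizes)) / total
    return w, b


# Each site holds private (x, y) pairs that follow y = 2x + 1.
site_a = [(0.0, 1.0), (0.25, 1.5), (0.5, 2.0)]
site_b = [(0.75, 2.5), (1.0, 3.0)]
sites = [site_a, site_b]

w, b = 0.0, 0.0  # global model held by the coordinator
for _ in range(30):
    # Only parameters move between coordinator and sites; raw pairs stay put.
    updates = [local_update(w, b, data) for data in sites]
    w, b = federated_average(updates, [len(d) for d in sites])

print(round(w, 3), round(b, 3))  # 2.0 1.0
```

In this sketch the coordinator sees each site's parameters in the clear; adding secure aggregation would hide the individual updates and reveal only their weighted combination.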

3.3 Secure Multiparty Computation (SMPC)

SMPC allows multiple entities to jointly compute a function while keeping their inputs private. Through techniques such as secret sharing, no party learns another’s inputs beyond what the final result itself reveals, yet all can participate in the computation.
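Additive secret sharing, one of the basic building blocks of SMPC, is enough to compute a secure sum. In this minimal sketch each hospital splits its private count into random shares modulo a public prime; each party sums the shares it receives, and only the combined partial sums reveal the total. The names and inputs are hypothetical.

```python
import secrets

PRIME = 2**61 - 1  # all arithmetic is done modulo this public prime


def share(value: int, n_parties: int) -> list[int]:
    """Split `value` into n random shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def reconstruct(shares: list[int]) -> int:
    return sum(shares) % PRIME


# Three hospitals each hold a private patient count.
private_inputs = [120, 45, 310]
n = len(private_inputs)

# Hospital i splits its input and sends share j to party j.
all_shares = [share(v, n) for v in private_inputs]

# Party j adds up the shares it received. Any n - 1 shares of an input are
# uniformly random, so a single party learns nothing about other inputs.
partial_sums = [sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)]

print(reconstruct(partial_sums))  # 475
```

This assumes semi-honest parties that follow the protocol; defending against parties that deviate from it requires additional machinery.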

3.4 Homomorphic Encryption

Homomorphic Encryption (HE) enables computation directly on encrypted data. Although HE is still much slower than computing on plaintext, it is critical for high-sensitivity workloads where even the party performing the computation must not see the plaintext.
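The Paillier cryptosystem, a classic additively homomorphic scheme, shows the core idea in a few lines. This is a toy sketch with tiny hard-coded primes that offers no real security; the function names are hypothetical. Multiplying two ciphertexts yields an encryption of the sum of their plaintexts, so a third party can add values it cannot read.

```python
import math
import secrets


def keygen(p: int, q: int) -> tuple[int, tuple[int, int]]:
    """Toy Paillier keys using g = n + 1. Tiny primes: NOT secure."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # with g = n + 1, mu is simply lam^-1 mod n
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    n_sq = n * n
    r = secrets.randbelow(n - 1) + 1
    while math.gcd(r, n) != 1:
        r = secrets.randbelow(n - 1) + 1
    # c = g^m * r^n mod n^2 with g = n + 1
    return (pow(n + 1, m, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(n: int, priv: tuple[int, int], c: int) -> int:
    lam, mu = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n  # L(x) = (x - 1) / n, then multiply by mu


def add_encrypted(n: int, c1: int, c2: int) -> int:
    # Multiplying ciphertexts adds the hidden plaintexts (mod n).
    return (c1 * c2) % (n * n)


n, priv = keygen(293, 433)  # real deployments use a modulus of 2048+ bits
c_a = encrypt(n, 1200)
c_b = encrypt(n, 345)
c_sum = add_encrypted(n, c_a, c_b)  # computed without the private key
print(decrypt(n, priv, c_sum))  # 1545
```

Paillier supports only addition of ciphertexts (and multiplication by a known constant); fully homomorphic schemes support arbitrary computation at a much higher cost.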

3.5 PET Combinations That Enable Privacy-Preserving Data Collaboration

Each PET has strengths and limitations. OpenMined’s innovation is combining PETs so that computations remain secure at every stage—ingest, training, inference, and audit.
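One simple combination pairs SMPC with DP: sites compute a total using additive secret sharing, and the aggregate is then released with Laplace noise. In this minimal sketch the exact total is reconstructed in one place before noise is added, so that step must be trusted; protocols that generate the noise inside the secure computation avoid this but are beyond a short example. All function names are hypothetical.

```python
import random
import secrets

PRIME = 2**61 - 1


def share(value: int, n_parties: int) -> list[int]:
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def secure_sum(private_values: list[int]) -> int:
    """Stage 1 (SMPC): sum the inputs via additive secret sharing."""
    n = len(private_values)
    all_shares = [share(v, n) for v in private_values]
    partial = [sum(row[j] for row in all_shares) % PRIME for j in range(n)]
    return sum(partial) % PRIME


def laplace(scale: float, rng: random.Random) -> float:
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_release(private_values: list[int], epsilon: float, rng: random.Random) -> float:
    """Stage 2 (DP): add noise to the aggregate before it is released."""
    total = secure_sum(private_values)
    # Sensitivity 1 assumes one person changes one site's count by at most 1.
    return total + laplace(1.0 / epsilon, rng)


rng = random.Random(7)
site_counts = [120, 45, 310]
print(private_release(site_counts, epsilon=1.0, rng=rng))  # noisy value near 475
```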

4. OpenMined’s Open-Source Tooling

4.1 PySyft

PySyft is a privacy-preserving tensor framework integrating DP, SMPC, and federated learning semantics directly into the computation graph. It allows developers to create secure workflows in familiar Pythonic patterns.

4.2 SyftBox

SyftBox provides secure data enclaves for institutions. It handles:

• Local policy enforcement

• Encrypted storage

• Access control

• Monitoring and logging

• Deployment across hospitals, agencies, or labs

SyftBox is the physical anchor of the OpenMined network.

4.3 Distributed Knowledge Graphs

OpenMined is building tooling for privacy-preserving knowledge federation—allowing institutions to link insights without exposing underlying datasets.

4.4 Supporting Infrastructure (Auth, Permissions, Audits)

OpenMined includes components for authentication, permissioning, and auditable logs. These systems ensure accountability, reproducibility, and regulatory alignment.

5. Trust, Governance, and Compliance

5.1 Data Stewardship in Distributed Systems

True data stewardship requires maintaining control at the site of origin. OpenMined’s governance model enforces local autonomy with global interoperability.

5.2 Alignment with GDPR, HIPAA, CCPA, FERPA

PET-based frameworks can support compliance with modern privacy laws by minimizing data movement and enforcing least-privilege access.

5.3 Verification, Transparency, and Reproducibility

Each computation request, approval, execution, and output is logged. This provides traceability for auditing bodies and reproducibility for researchers.

5.4 Privacy-Preserving Audit Trails

Encrypted logs make it possible to audit without revealing sensitive underlying data—an important shift in accountability structures.
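One way to make logs auditable without exposing sensitive results is a hash chain that records only fingerprints of outputs. Note that this sketch swaps in hashing for the encryption mentioned above: each entry stores a SHA-256 digest of the output plus the hash of the previous entry, so any later edit to the history is detectable. `AuditLog` and its methods are hypothetical and do not describe OpenMined's log format.

```python
import hashlib
import json
from typing import Optional

GENESIS = "0" * 64


def _chain_hash(payload: dict, prev_hash: str) -> str:
    blob = json.dumps(payload, sort_keys=True) + prev_hash
    return hashlib.sha256(blob.encode("utf-8")).hexdigest()


class AuditLog:
    """Hypothetical append-only log; each entry commits to the one before it."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, event: str, request_id: int, output: Optional[bytes] = None) -> None:
        payload = {
            "event": event,
            "request_id": request_id,
            # A fingerprint of the output is logged, never the output itself.
            "output_sha256": hashlib.sha256(output).hexdigest() if output is not None else None,
        }
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append({"payload": payload, "prev": prev, "hash": _chain_hash(payload, prev)})

    def verify(self) -> bool:
        # Recompute every link; editing any past entry breaks the chain.
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != _chain_hash(entry["payload"], prev):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.record("requested", 1)
log.record("approved", 1)
log.record("executed", 1, output=b"mean_age=44.0")
print(log.verify())  # True

log.entries[1]["payload"]["event"] = "rejected"  # tamper with history
print(log.verify())  # False
```

A plain hash of a short, guessable output (such as a single count) can be recovered by brute force, so a keyed hash such as HMAC would be a safer choice in practice.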

6. Real-World Uses of Privacy-Preserving Data Collaboration

6.1 Multi-Institution Medical Research

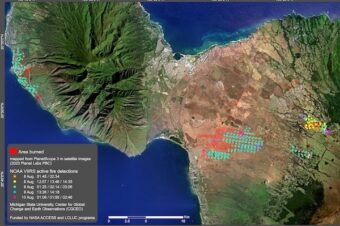

OpenMined enables cross-hospital studies without transferring patient records—a major step forward for epidemiology, oncology, and rare-disease research.

6.2 Policy Modeling Using Sensitive Government Data

Governments can run simulations on census, welfare, tax, and mobility data without exposing citizens’ identities.

6.3 Cross-Border Financial Risk Analysis

Banks and regulators can model fraud, systemic risk, and compliance scenarios across jurisdictions with strict data sovereignty requirements.

6.4 Performance Factors in Privacy-Preserving Data Collaboration

PETs introduce overhead, but strategic pipeline design and hybrid PET combinations allow practical performance in real-world deployments.

7. The Road Ahead

7.1 The Rise of Decentralized AI

OpenMined aligns with emerging architectures where computation happens across distributed nodes instead of centralized clouds.

7.2 Integration with Next-Generation AI Models

Future models will increasingly require sensitive data. OpenMined provides the structures necessary for safe, compliant access.

7.3 Future Research in Privacy-Preserving Computation

Active areas include improved HE performance, hybrid PET orchestration, and privacy-preserving evaluation methods.

7.4 Limitations and Open Challenges

Challenges include PET performance costs, interoperability standards, and the need for global adoption.

As AI systems evolve, privacy-preserving data collaboration will become a foundational requirement for any organization working with regulated or high-sensitivity information.

8. Conclusion

8.1 The Need for Secure Data Collaboration

Safely accessing sensitive data is a central challenge for modern AI.

8.2 OpenMined as Critical Infrastructure

OpenMined provides the technical and governance foundations needed for AI systems to evolve responsibly.

8.3 Invitation to Research, Build, and Participate

Researchers, developers, agencies, and institutions can join a global effort to unlock knowledge while preserving privacy.

By enabling privacy-preserving data collaboration, OpenMined provides the technical groundwork for global-scale research while maintaining strict privacy controls.

To learn more about the organization’s mission and open-source tools, visit https://openmined.org/

For more articles exploring emerging technologies and digital governance, visit the NKO.org Blog https://nko.org/blog/